Use Case 8

AR-assisted emergency surgical care

Surgeons should play a central role in disaster planning and management because most forms of disaster involve an overwhelming number of bodily injuries. Emergency medical teams perform various types of surgical procedures after sudden-onset disasters, including procedures for soft tissue wounds and orthopaedic trauma, as well as abdominal surgery [1,2]. Augmented Reality (AR) on head-mounted displays (HMDs), using state-of-the-art hardware such as the Magic Leap or the Microsoft HoloLens, has long been foreseen as a key enabler for clinicians in surgical use cases [3], especially for procedures performed outside the operating room. In such conditions, monolithic HMD applications fail to maintain important factors such as user mobility, battery life, and Quality of Experience (QoE), which motivates a distributed cloud/edge software architecture. Toward this end, 5G and cloud computing will be central components in accelerating remote rendering computations and image transfers to wearable AR devices.

ORamaVR leads the Use Case (UC) “AR-assisted emergency surgical care”, identified in the context of the EU-funded 5G-EPICENTRE project. Specifically, the UC will experiment with holographic AR technology for emergency medical surgery teams by overlaying deformable medical models directly on top of the patient's body parts, effectively enabling surgeons to see inside the body (visualizing bones, blood vessels, etc.) and to perform surgical actions following step-by-step instructions (see Figure 1).

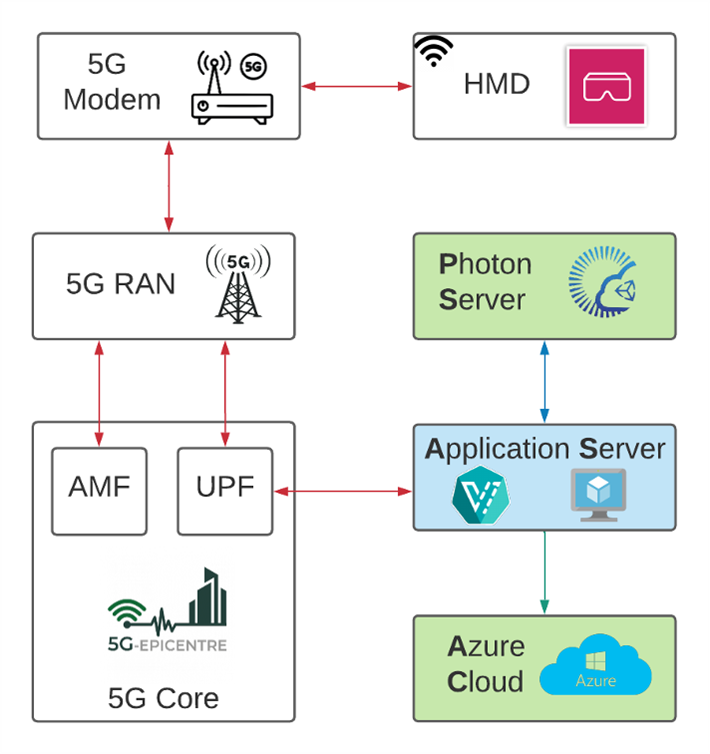

The goal is to combine the computationally and data-intensive AR and Computer Vision algorithms with upcoming 5G network architectures deployed for edge computing, so as to satisfy real-time interaction requirements and provide an efficient and powerful platform for the widespread adoption of such applications. Toward this end, the authors have adapted MAGES [4,5], the psychomotor Virtual Reality (VR) surgical training solution developed by ORamaVR. By developing the necessary Virtual Network Functions (VNFs) to manage data-intensive services (e.g., pre-rendering, caching, compression) and by exploiting the available network resources and Multi-access Edge Computing (MEC) support provided by the 5G-EPICENTRE infrastructure, this UC aims to provide powerful AR-based tools, usable on site, to first-aid responders (see Figure 2 for an overview of the UC NetApps layout).
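To make the division of labour between edge and headset more concrete, the following is a minimal, purely illustrative Python sketch of how pre-rendering, caching, and compression could fit together in an edge-side service. It is not the MAGES or 5G-EPICENTRE implementation: the names `prerender`, `EdgeFrameCache`, and `decode_on_headset`, the LRU eviction policy, and the use of `zlib` are all assumptions chosen for brevity.

```python
import zlib
from collections import OrderedDict


def prerender(model_id, step):
    """Hypothetical stand-in for an expensive edge-side render.

    A real service would return image or mesh data; this returns a
    repetitive placeholder payload so that compression has an effect.
    """
    return f"{model_id}:{step};".encode() * 1000


class EdgeFrameCache:
    """Assumed edge-side LRU cache of zlib-compressed pre-rendered payloads."""

    def __init__(self, capacity=32):
        self.capacity = capacity
        self._store = OrderedDict()  # (model_id, step) -> compressed bytes
        self.hits = 0
        self.misses = 0

    def get(self, model_id, step):
        key = (model_id, step)
        if key in self._store:
            # Cache hit: mark the entry as most recently used.
            self.hits += 1
            self._store.move_to_end(key)
            return self._store[key]
        # Cache miss: render once on the edge, compress, and store.
        self.misses += 1
        packed = zlib.compress(prerender(model_id, step))
        self._store[key] = packed
        if len(self._store) > self.capacity:
            # Evict the least recently used entry.
            self._store.popitem(last=False)
        return packed


def decode_on_headset(packed):
    """The wearable device only decompresses; it never renders."""
    return zlib.decompress(packed)


if __name__ == "__main__":
    cache = EdgeFrameCache(capacity=2)
    first = cache.get("femur", 1)   # rendered and compressed on the edge
    second = cache.get("femur", 1)  # served from the cache
    print(cache.hits, cache.misses, len(first), len(decode_on_headset(second)))
```

In this sketch the headset only decompresses what it receives, which reflects the motivation stated above: moving rendering work off the HMD to preserve mobility and battery life.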

[1] C. A. Coventry, A. I. Vaska, A. J. Holland, D. J. Read, and R. Q. Ivers, “Surgical procedures performed by emergency medical teams in sudden-onset disasters: a systematic review,” World Journal of Surgery, vol. 43, no. 5, pp. 1226–1231, 2019.

[2] T. Birrenbach, J. Zbinden, G. Papagiannakis, A. K. Exadaktylos, M. Müller, W. E. Hautz, and T. C. Sauter, “Effectiveness and utility of virtual reality simulation as an educational tool for safe performance of covid-19 diagnostics: Prospective, randomized pilot trial,” JMIR Serious Games, vol. 9, no. 4, p. e29586, Oct 2021. [Online]. Available: https://games.jmir.org/2021/4/e29586

[3] P. Zikas, S. Kateros, N. Lydatakis, M. Kentros, E. Geronikolakis, M. Kamarianakis, G. Evangelou, I. Kartsonaki, A. Apostolou, T. Birrenbach et al., “Virtual reality medical training for covid-19 swab testing and proper handling of personal protective equipment: Development and usability,” Frontiers in Virtual Reality, p. 175, 2022.

[4] G. Papagiannakis, P. Zikas, N. Lydatakis, S. Kateros, M. Kentros, E. Geronikolakis, M. Kamarianakis, I. Kartsonaki, and G. Evangelou, “Mages 3.0: Tying the knot of medical VR,” in ACM SIGGRAPH 2020 Immersive Pavilion, 2020, pp. 1–2.

[5] G. Papagiannakis, N. Lydatakis, S. Kateros, S. Georgiou, and P. Zikas, “Transforming medical education and training with VR using MAGES,” in SIGGRAPH Asia 2018 Posters, 2018, pp. 1–2.